Building a Streaming Social Media Analytics Platform

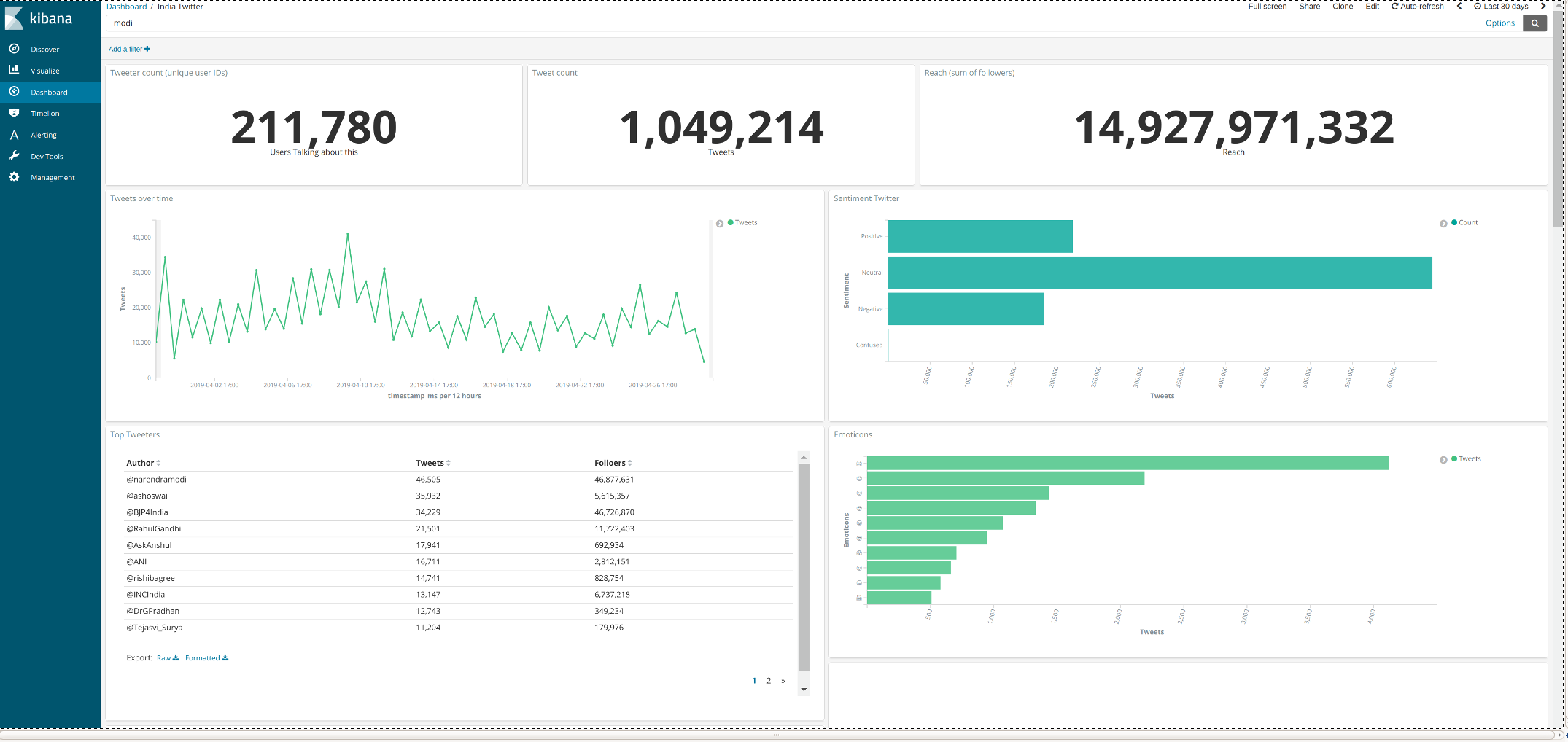

I wanted to track topics across a wide range of social media platforms in real time. I looked around, and most options were expensive and limited to a few social media sources. I found this great AWS blog to get started. Thanks to the open source (and powerful!) Elasticsearch and Kibana, the overall project took me about 10 hours end to end, and voilà! I had a streaming social media platform up and running with over 30 million records per month.

Setting it all up

The overall architecture looks something like this:

Start by following all of the steps in the AWS blog as described, and then tweak as needed. For tweaks, I found I wanted to change the Elasticsearch mappings in the Twitter streamer. Since ES mappings can be tricky, it's worth checking out some other examples, e.g. my code is here.
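To make the mapping tweak more concrete, here is a minimal sketch of an explicit mapping for tweet documents, written as a Python dict that serializes to the JSON body Elasticsearch accepts when an index is created. The field names (`text`, `user_screen_name`, `created_at`, `coordinates`) are assumptions for illustration and are not taken from the AWS blog or my repo. The typeless `mappings.properties` layout assumes Elasticsearch 7 or later; older versions nest the properties under a document type name.

```python
import json


def tweet_index_body():
    """Return an index-creation body with explicit field types.

    All field names here are hypothetical; adjust them to whatever
    your streamer actually sends.
    """
    return {
        "mappings": {
            "properties": {
                # Full-text searchable tweet body.
                "text": {"type": "text"},
                # Exact-match field, useful for aggregations in Kibana.
                "user_screen_name": {"type": "keyword"},
                # Twitter's raw created_at string is not ISO 8601, so it needs
                # either a custom "format" here or conversion before indexing.
                "created_at": {"type": "date"},
                # Needed for Kibana map visualizations.
                "coordinates": {"type": "geo_point"},
            }
        }
    }


if __name__ == "__main__":
    print(json.dumps(tweet_index_body(), indent=2))
```

Setting `keyword` and `geo_point` types explicitly is the usual reason to override the automatic mapping, since Elasticsearch cannot infer them reliably from the first documents it sees.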

At this stage I’m assuming you have completed the above setup successfully and have:

- The AWS command line interface (CLI) installed on your local machine.

- An AWS EC2 instance running.

- Tweets coming into your Elasticsearch instance.
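One quick way to confirm the last point is to ask Elasticsearch how many documents an index holds using its `_count` API. The sketch below is only an illustration: the endpoint and the index name `twitter` are placeholders, and it assumes your domain's access policy allows unsigned requests from your IP address.

```python
import json
from urllib.parse import quote
from urllib.request import urlopen


def count_url(endpoint, index):
    """Build the URL of the Elasticsearch _count API for one index."""
    return f"{endpoint.rstrip('/')}/{quote(index, safe='')}/_count"


def document_count(endpoint, index, timeout=10):
    """Return the number of documents in the index (makes a network call)."""
    with urlopen(count_url(endpoint, index), timeout=timeout) as response:
        return json.load(response)["count"]


if __name__ == "__main__":
    # Placeholder endpoint and index name; substitute your own.
    print(count_url("https://my-es-domain.example.com", "twitter"))
```

Calling `document_count` twice a minute or so apart and seeing the number grow is a reasonable sign that the stream is flowing.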

Add more social media streams

If you have set up Twitter as per the AWS blog, you may want to make some tweaks and add more social media sources.

Webhose is a great all-in-one, near-real-time data source for news and blog feeds. The steps to set it up are:

- Log in to your EC2 instance.

- Install tmux.

$ sudo apt-get install tmux

- Clone this GitHub repo:

$ git clone https://github.com/silaseverett/aws-elk-data-stream.git

- Sign up for webhose, then go to your dashboard at https://webhose.io/dashboard and scroll down to the API key. Copy it.

- Configure confighose.py with your webhose API key. Check out the webhose API playground for building the query string that fits your needs.

$ cd webhose

$ vi confighose.py

Then paste your webhose API token.

- Set up the virtual environment for the webhose producer (it is activated in a later step from ~/environments/my_env):

$ virtualenv ~/environments/my_env

- Start a tmux session

$ tmux

- Activate the virtual environment; ‘(my_env)’ should then appear in the prompt:

$ source ~/environments/my_env/bin/activate

- Start the webhose producer.

(my_env) $ python webhoseio_producer.py

The official code for the webhose Python client used by the producer can be found here.
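For a feel of what a producer like this does, here is a rough sketch of the forwarding half: it takes posts (plain dicts) and hands them on in batches. It is not the code from the repo. It assumes the posts go to the same Kinesis Firehose delivery stream as the tweets, and the function names and the injected `send` callable are made up for illustration. In real use, `send` could wrap boto3's `put_record_batch(DeliveryStreamName=..., Records=batch)`.

```python
import json

# Firehose PutRecordBatch accepts at most 500 records per call.
MAX_BATCH = 500


def to_record(post):
    """Serialize one post as a Firehose record of newline-terminated JSON."""
    return {"Data": (json.dumps(post) + "\n").encode("utf-8")}


def batches(posts, size=MAX_BATCH):
    """Yield lists of Firehose records, each at most `size` long."""
    batch = []
    for post in posts:
        batch.append(to_record(post))
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def forward(posts, send, size=MAX_BATCH):
    """Pass every batch to `send` and return how many records went out."""
    sent = 0
    for batch in batches(posts, size):
        send(batch)
        sent += len(batch)
    return sent


if __name__ == "__main__":
    sample = [{"title": f"post {i}"} for i in range(5)]
    forward(sample, send=lambda batch: print(len(batch), "records"), size=2)
```

Keeping the network call behind `send` means the batching logic can be tried out locally before any AWS credentials are involved.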

Modifying the platform

Once the analytics platform is built, here is some basic guidance for modifying it. Two common tasks are (1) changing the search terms used for filtering web documents into Elasticsearch and Kibana and (2) basic maintenance of the tool in cases where it needs to be restarted.

Task 1: Modifying Search Term Filters

Modifying the search term filters requires logging on to the EC2 instance, stopping the message producers (Twitter and Webhose.io), and then opening and modifying the producer config files. So first log on to the EC2 instance, following the login instructions above.

- Change to the twitter-streaming-firehose-nodejs directory

$ cd twitter-streaming-firehose-nodejs

- Edit the config.js file

$ vim config.js

Vim is a classic, if not archaic, editor as you can see, but it’s the one built in to Ubuntu. Scroll down to the bottom of the file and you will see the ‘terms’. To make modifications:

hit 'i' key for insert

use arrow keys to navigate to the "terms" section

make changes

hit 'esc' then a colon ':'

then enter 'wq' to write to file and quit vim

(if no changes are desired, enter 'q' instead of 'wq' to exit vim, or 'q!' to discard edits you have already made)

then hit return

- Changes will not take effect until the Twitter producer is stopped and started again. See the last section on starting and stopping the producers.

Webhose

- Open the webhose directory

$ cd webhose

- Edit the config file ‘configwhose.py’

$ vim configwhose.py

While in vim, you’ll find the terms in the ‘query_params’ dictionary at the top of the file. You can set the query params to match the Python snippet shown in the Integrate box of the webhose.io API playground.

Since we are now in Vim:

hit 'i' key for insert

use arrow keys to navigate to the "query_params" at the top

make changes

hit 'esc' then a colon ':'

then enter 'wq' to write to file and quit vim

(if no changes are desired, enter 'q' instead of 'wq' to exit vim, or 'q!' to discard edits you have already made)

then hit return

- Changes will not take effect until the webhose producer is stopped and started again. See the next section for how to stop and start the producers.
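As an illustration of what the ‘query_params’ dictionary might contain, here is a small sketch that assembles the `q` string from a list of search terms. The helper `build_query` and the example terms are hypothetical and not from the repo; the `language:` and `site_type:` filters and the `sort` key follow the webhose query syntax as I understand it, so check the API playground output before relying on them.

```python
def build_query(terms, language="english", site_types=("news", "blogs")):
    """Combine search terms and filters into one webhose-style query string."""
    if not terms:
        raise ValueError("at least one search term is required")
    # Quote each term so multi-word phrases are matched as phrases.
    parts = ["(" + " OR ".join(f'"{term}"' for term in terms) + ")"]
    parts.append(f"language:{language}")
    if site_types:
        parts.append(
            "(" + " OR ".join(f"site_type:{kind}" for kind in site_types) + ")"
        )
    return " ".join(parts)


# Example values only; replace the terms with your own.
query_params = {
    "q": build_query(["aws", "elasticsearch"]),
    "sort": "crawled",
}

if __name__ == "__main__":
    print(query_params["q"])
```

Generating the string from a list keeps the quoting and parentheses consistent when terms are added or removed later.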

Task 2: Starting and Stopping Data Producers

Log on to the EC2 instance, following the directions above.

Stopping

- First, list all the existing tmux sessions

$ tmux list-sessions

- Bring up the tmux session that holds the producer windows (by default only one is available).

$ tmux attach-session -t 0

- Now in tmux, toggle to the second of three stacked windows on the screen

ctrl 'b'

hit down arrow once

- Kill the Twitter producer

ctrl 'c'

type 'exit'

Starting

- Change to the ‘twitter-streaming-firehose-nodejs’ directory

$ cd twitter-streaming-firehose-nodejs

- Run ‘twitter_stream_producer_app’ with Node.js

$ node twitter_stream_producer_app

- Toggle back to the first window and leave the tmux session (detaching keeps the producers running)

ctrl 'b'

then up arrow

and detach tmux:

$ tmux detach

Webhose

Stopping*

- List all the existing tmux sessions

$ tmux list-sessions

- Bring up the tmux session that holds the producer windows (by default only one is available).

$ tmux attach-session -t 0

- Now in tmux, toggle to the third of three stacked windows on the screen

ctrl 'b'

hit down arrow twice

- Kill the webhose producer (note that webhose is running in a Python 2.7 virtualenv) by bringing it to the foreground and interrupting it

(my_env) $ fg %1

ctrl 'c'

Starting*

- Go into the webhose directory and run the producer's Python script.

(my_env) $ cd webhose

(my_env) $ python webhose_producer.py

- Toggle back to the first window and leave the tmux session (detaching keeps the producers running)

ctrl 'b', then up arrow

and detach tmux:

$ tmux detach

*Note: the webhose.io producer is run from a Python 2.7 virtual environment. To activate the env:

$ source ~/environments/my_env/bin/activate

You will see (my_env) at the front of the command prompt when activated.
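If you restart the producers often, the stop and start steps above can be scripted with `tmux send-keys` instead of attaching by hand. The sketch below (written for Python 3, so run it outside the Python 2.7 env) only builds and runs those tmux commands. The pane numbers are assumptions: it supposes session 0, window 0, with the Twitter producer in pane 1, and that the pane is already in the right directory. The helper names are made up for illustration.

```python
import subprocess


def pane_target(session="0", window=0, pane=1):
    """Format a tmux target as session:window.pane."""
    return f"{session}:{window}.{pane}"


def stop_command(target):
    """tmux command that sends Ctrl-C to the pane, interrupting the producer."""
    return ["tmux", "send-keys", "-t", target, "C-c"]


def start_command(target, shell_command):
    """tmux command that types shell_command into the pane and presses Enter."""
    return ["tmux", "send-keys", "-t", target, shell_command, "Enter"]


def restart(target, shell_command, run=subprocess.run):
    """Stop, then start a producer; `run` is injectable so this can be dry-run."""
    for command in (stop_command(target), start_command(target, shell_command)):
        run(command, check=True)


if __name__ == "__main__":
    # Dry run: print the tmux commands instead of executing them.
    target = pane_target(pane=1)  # assumed pane of the Twitter producer
    restart(
        target,
        "node twitter_stream_producer_app",
        run=lambda command, check: print(" ".join(command)),
    )
```

Dropping the `run=` argument executes the commands for real. For the webhose pane, a producer that is running in the background would still need `fg %1` before Ctrl-C reaches it.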

Important URLs to track along the way

CloudWatch

Elasticsearch

Kibana

S3

Lambda